A note from the Editor:

This article begins an indefinite series on employing and leveraging technology at the small unit level. Much has been written, and will continue to be written, on the use of data science at the operational and strategic levels. We should all be paying attention to these rapidly evolving capabilities and what they mean for our country and our allies. But we cannot overlook the needs of our Marines who have dirt on their boots and are staring across the last hundred yards to the objective.

Presently, unit leaders and junior officers face numerous challenges in garrison and training, chief among them the scarcity of time, which can be alleviated with the proper implementation of available software and technology. In the future we will face an accelerating and expanding battlefield, which will increase the cognitive load at each echelon of our force. Being adaptable and flexible in combat requires rehearsing for the fluidity of war in garrison and training. Remaining attuned to cutting-edge developments in both software and hardware, while staying agile enough to rapidly employ emerging technology, will prove decisive. Brilliance in the basics remains the foundation of all that we do, but increasing the speed at which we achieve mastery or employ our Marines is a critical advantage that we must seek out and dominate. Technology will greatly assist us in this pursuit and set conditions for future success.

With this in mind, this article marks the Connecting File’s humble attempt to assist our community in gaining this decisive advantage. We are fortunate to have earned the support of several infantry and reconnaissance officers who have advanced degrees in these topics, but we hope to hear from many of you. What problems are you facing? What problems have you solved at your level? Where is efficiency needed? What can we automate? What must retain a human in the loop? Send us your observations and ideas, even if they only inform our inquiry and exploration. We look forward to advancing the line alongside you.

Stay lethal.

Making maximum use of every hour and every minute is as important to speed in combat as simply going fast when we are moving. It is important to every member of a military force whether serving on staffs or in units—aviation, combat service support, ground combat, everyone. A good tactician has a constant sense of urgency. We never waste time, and we are never content with the pace at which events are happening. We are always saying to ourselves and to others, “Faster! Faster!” (MCDP 1-3)

This article frames what you should know as you consider integrating the data science and AI tools that are coming to the infantry. It will also serve as a touch point and reference guide to baseline forthcoming discussions. In future articles, we will explain in detail how the AI systems in development work and the implications of each. Advancements in AI are happening rapidly, and we do not have time to be left out. In 2017, a team of Google researchers released an academic paper proposing a new neural network structure, known as the transformer. By 2024, ChatGPT and other models like it were commonly known and used in daily and professional life. Those models are built on the structure proposed in that 2017 paper, which illustrates the rapid rate of development in the AI world.

To be clear, AI is not the solution to all our warfighting problems. However, it can enhance our warfighting capability and deserves the attention of warfighters. Just like knowing the capabilities and limitations of our weapon systems, we need to know the capabilities and limitations of digital technology to employ it effectively and artfully. Please note that everything we discuss here is about software, agnostic to the hardware (tablet, drone, radar).

To clarify terminology, let’s begin with unofficial definitions.

Data science, in this article, refers to the use of data to gain insights and knowledge. By that definition, calculating the average of your company’s PFT scores by platoon to see how they compare, or using an AI-enabled UAS to classify (recognize and label) a vehicle it is flying over as a T-72 are both examples of data science.
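The PFT example above is simple enough to sketch in a few lines of Python. This is only an illustration: the roster, platoon names, and scores are invented, and `average_by_platoon` is a hypothetical helper, not part of any fielded tool.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical roster of (platoon, PFT score). All values are invented.
scores = [
    ("1st Plt", 265), ("1st Plt", 281), ("1st Plt", 240),
    ("2nd Plt", 290), ("2nd Plt", 275),
    ("Wpns Plt", 255), ("Wpns Plt", 263), ("Wpns Plt", 271),
]

def average_by_platoon(records):
    """Group scores by platoon and return the mean score for each platoon."""
    grouped = defaultdict(list)
    for platoon, score in records:
        grouped[platoon].append(score)
    return {platoon: mean(values) for platoon, values in grouped.items()}

averages = average_by_platoon(scores)

# Print platoons from highest to lowest average.
for platoon, avg in sorted(averages.items(), key=lambda item: item[1], reverse=True):
    print(f"{platoon}: {avg:.1f}")
```

Nothing here is AI. It is data turned into an insight a commander can act on, which is all the definition above requires.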

Now, let’s turn to AI, which is a subset of data science. The DoD defines AI as “the ability of machines to perform tasks that normally require human intelligence.” (Department of Defense Data, Analytics, and Artificial Intelligence Adoption Strategy, June 2023). There are further subsets of AI that we will explain in follow-on articles, but for now we will stay at a high level. The diagram below gives an overview of the components of data science, with AI shown as a subset of other disciplines under the data science umbrella:

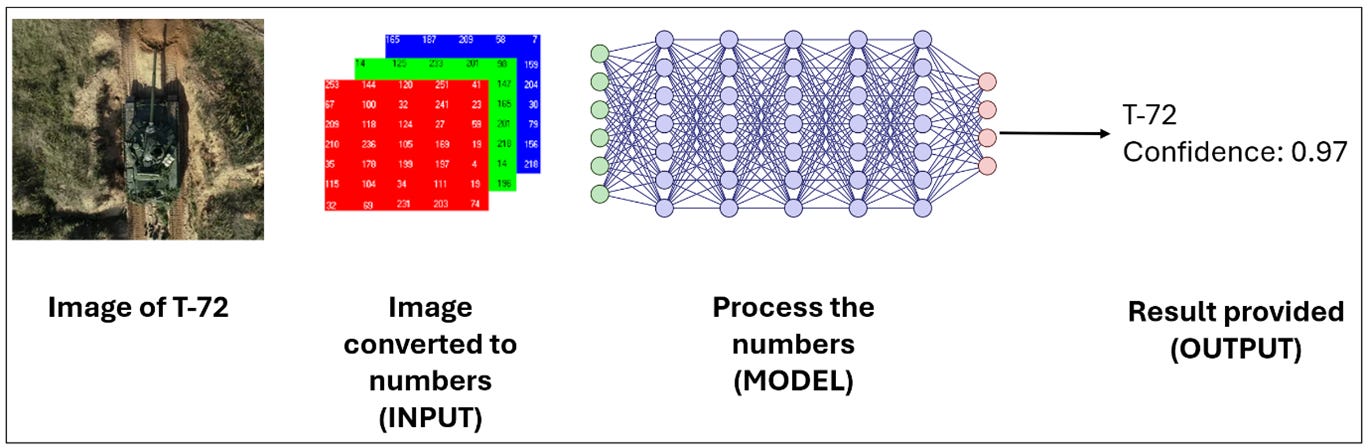

At the simplest level, here is how AI works: There is a base model, which is nothing more than a mathematical equation. A user takes an input, passes it through the model, and receives an output. An image classifier, for example, takes an image as its input and returns a label such as “T-72” as its output.

Thus, there are three critical characteristics to gauge the effectiveness of AI: reliable input data, the type and quality of the model, and the utility and interpretability of the output. This article seeks to contextualize each of those requirements.
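To make the input, model, output flow concrete, here is a minimal sketch in Python. It assumes the input has already been reduced to three made-up features, and the weights are hypothetical numbers chosen by hand. A real image classifier works on raw pixels and learns millions of weights during training, but the shape of the process is the same.

```python
import math

# Hypothetical weights, chosen by hand for illustration.
# In a real system these numbers are learned during training.
WEIGHTS = {"has_turret": 2.0, "has_tracks": 1.5, "length_m": 0.3}
BIAS = -4.0

def model(features):
    """The 'model' is an equation: a weighted sum squashed into a 0-to-1 score."""
    z = BIAS + sum(WEIGHTS[name] * features[name] for name in WEIGHTS)
    return 1.0 / (1.0 + math.exp(-z))

def classify(features, threshold=0.5):
    """Pass the input through the model and turn the score into a label."""
    score = model(features)
    label = "tank" if score >= threshold else "not a tank"
    return label, score

# Two invented inputs.
tracked_vehicle = {"has_turret": 1, "has_tracks": 1, "length_m": 7.0}
cargo_truck = {"has_turret": 0, "has_tracks": 0, "length_m": 6.0}

for name, features in [("tracked vehicle", tracked_vehicle), ("cargo truck", cargo_truck)]:
    label, score = classify(features)
    print(f"{name}: {label} (score {score:.2f})")
```

All three characteristics show up here: the features are the input data, `WEIGHTS` and `BIAS` are the model, and the label with its score is the output the user has to interpret.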

Quality Control

For all AI applications, the quality of the input data determines the quality of the output. Beyond user input data, some AI models must also be trained on data during development. To illustrate the importance of data, suppose we want software integrated in our UAS that classifies (recognizes) enemy vehicles from the UAS feed. Now, suppose instead of teaching a UAS how to identify a T-72, you are going to teach aspiring 0352s how to identify a T-72. The natural way to do this is to show them pictures of T-72s from all angles, during the daytime and at night, and point out identifying characteristics, all the while telling them “That is a T-72”. We need to do the same thing for our model, which means we need a lot of pictures of T-72s. As developers train a model, they continually test its ability to do the task at hand. Once developers are satisfied with the model’s performance, it becomes a capability we can use. When the UAS flies over a T-72, the model will likely recognize that vehicle.
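The train-then-test cycle described above can be sketched with a toy classifier. Every number below is invented: each "image" is reduced to two made-up features, and the nearest-centroid method is a deliberately simple stand-in for the neural networks used in practice. The point is the cycle, not the method: learn from labeled examples, then check performance on examples the model has never seen.

```python
from statistics import mean

# Hypothetical labeled examples: (two invented image features) -> label.
training_set = [
    ((0.90, 0.80), "T-72"), ((0.80, 0.90), "T-72"), ((0.85, 0.85), "T-72"),
    ((0.20, 0.30), "other"), ((0.30, 0.20), "other"), ((0.25, 0.10), "other"),
]
# Held-out examples the model never sees during training.
test_set = [((0.80, 0.80), "T-72"), ((0.30, 0.30), "other")]

def train_model(examples):
    """'Training' here means averaging the features of each label into a centroid."""
    grouped = {}
    for features, label in examples:
        grouped.setdefault(label, []).append(features)
    return {
        label: tuple(mean(column) for column in zip(*rows))
        for label, rows in grouped.items()
    }

def predict(centroids, features):
    """Return the label whose centroid is closest to the input features."""
    def squared_distance(centroid):
        return sum((a - b) ** 2 for a, b in zip(centroid, features))
    return min(centroids, key=lambda label: squared_distance(centroids[label]))

def accuracy(centroids, examples):
    """Fraction of examples the model labels correctly."""
    correct = sum(predict(centroids, features) == label for features, label in examples)
    return correct / len(examples)

centroids = train_model(training_set)
print(f"Held-out accuracy: {accuracy(centroids, test_set):.0%}")
```

If the training pictures were all taken at noon in open desert, the held-out score would say nothing about performance at night or in woodland, which is why the quantity and variety of data matter as much as the model.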

That same training cycle applies to technology at the cutting edge of AI, including chat models like ChatGPT. Though the training process is conceptually simple, having enough quality data to train and test the model is the most critical step in development. Takeaway 1: Data drives everything in the AI world. Data collection and data security are critically important. Thus, as our profession becomes more data-centric, we must realize that things like intelligence reports, schemes of maneuver, fire support plans, logstats, and perstats are all examples of data that will feed the models we use.

Gaining Efficiency

The type and quality of the model are the responsibility of the developers and do not need to be a focus of the user. However, understanding the types of AI models and how they work will directly inform your use of those models. Some models can do parts of your job better than you can. Augmented Reconnaissance and Estimate of the Situation (ARES), developed by Captain Ryan Helm, is a great example of this (you might find ARES in the TAK store as ARES or Small Unit Mission Planner). This tool (when given the right data) will find landing zones, beach landing sites, routes to the objective, and support by fire positions with a speed and precision that an 0302 with an MCH or a 1:50,000 and a Ranger Joe cannot match. ARES uses optimization to mathematically determine, based on geospatial data, TCMs that otherwise require a unit leader to find. Thus, I’m confident in saying ARES can find covered and concealed routes to the objective better than I can.
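To give a feel for what "optimization over geospatial data" can mean, here is a small sketch of a least-cost route search. It is not ARES’s actual method, which this article does not describe. It assumes terrain has been reduced to a hypothetical grid of exposure costs (low means covered and concealed, high means exposed) and uses Dijkstra’s shortest-path algorithm, a standard technique, to find the route with the lowest total exposure.

```python
import heapq

# Hypothetical exposure cost per grid cell (1 = concealed, 9 = exposed).
GRID = [
    [1, 9, 1, 1],
    [1, 9, 1, 9],
    [1, 1, 1, 9],
    [9, 9, 1, 1],
]

def least_exposed_route(grid, start, goal):
    """Dijkstra's algorithm: return the route with the lowest total cost."""
    rows, cols = len(grid), len(grid[0])
    best = {start: grid[start[0]][start[1]]}  # cheapest known cost to each cell
    previous = {}                             # back-pointers to rebuild the route
    queue = [(best[start], start)]
    while queue:
        cost, cell = heapq.heappop(queue)
        if cell == goal:
            break
        if cost > best[cell]:
            continue  # stale queue entry; a cheaper way here was already found
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols:
                new_cost = cost + grid[nr][nc]
                if new_cost < best.get((nr, nc), float("inf")):
                    best[(nr, nc)] = new_cost
                    previous[(nr, nc)] = cell
                    heapq.heappush(queue, (new_cost, (nr, nc)))
    # Walk the back-pointers from the goal to the start.
    route = [goal]
    while route[-1] != start:
        route.append(previous[route[-1]])
    route.reverse()
    return route, best[goal]

route, total_cost = least_exposed_route(GRID, start=(0, 0), goal=(3, 3))
print("Route:", route)
print("Total exposure cost:", total_cost)
```

On a four-by-four grid a Marine can eyeball the answer. On a grid built from real elevation and vegetation data with millions of cells, the same algorithm still returns the provably cheapest route in seconds, which is the kind of advantage described above.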

Compare that to a model like ChatGPT, which uses a neural network to make predictions based on the input and generate a best-guess output. While a ChatGPT-like model is better than I am at determining whether an image has a T-72 in it, it is not going to be better than me at route planning. Therefore, as new tools are implemented, users need at least a surface-level understanding of how each model works. The litmus test for determining when AI models can be effectively applied to our profession falls nicely along the lines of the science and the art of war. If a task lives in the science (terrain analysis, equipment capabilities and limitations, etc.) then AI tools could likely be made to do it better and faster than we can. This frees us up to live in the art. AI tools can quickly define the bounds of what is possible and allow us to focus on how to operate within those bounds. Takeaway 2: We need to examine everything we do and determine where digital technology can improve our current processes, which potentially includes everything on the science side of war.

Inspect What You Expect

Understanding how a model generates its output is essential for making the right decisions based on that output. For example, take a model like the ARES Landing Zone (LZ) finder. It uses a set of predefined rules and parameters, such as minimum size, maximum slope, and nearby obstacles, to identify all possible landing zones. The output is repeatable because running the model with the same data will always produce the same result. It is also explainable because the rules and parameters used by the model are fully transparent.
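A rule-based model of this kind can be sketched as follows. The thresholds and candidate sites are hypothetical and are not ARES’s actual parameters; real values depend on aircraft type and unit SOP. The sketch shows why such output is both repeatable and explainable: every rejection comes with the rule that caused it.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Site:
    name: str
    length_m: float
    width_m: float
    slope_deg: float
    obstacle_distance_m: float

# Hypothetical thresholds for illustration only.
RULES = {
    "min_length_m": 50,
    "min_width_m": 50,
    "max_slope_deg": 7,
    "min_obstacle_distance_m": 30,
}

def check_site(site, rules=RULES):
    """Return the list of rules a site fails; an empty list means it is suitable."""
    failures = []
    if site.length_m < rules["min_length_m"] or site.width_m < rules["min_width_m"]:
        failures.append("too small")
    if site.slope_deg > rules["max_slope_deg"]:
        failures.append("too steep")
    if site.obstacle_distance_m < rules["min_obstacle_distance_m"]:
        failures.append("obstacles too close")
    return failures

def find_landing_zones(sites, rules=RULES):
    """Keep only the sites that pass every rule."""
    return [site.name for site in sites if not check_site(site, rules)]

# Invented candidate sites.
candidates = [
    Site("A", length_m=80, width_m=60, slope_deg=3, obstacle_distance_m=100),
    Site("B", length_m=40, width_m=60, slope_deg=2, obstacle_distance_m=100),
    Site("C", length_m=90, width_m=90, slope_deg=12, obstacle_distance_m=10),
]

print("Suitable:", find_landing_zones(candidates))
for site in candidates:
    print(site.name, check_site(site) or "meets all rules")
```

Run it a thousand times with the same data and the answer never changes. If the answer looks wrong, the user can read the rules and see exactly why.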

Now, compare that to a model that identifies an image of a tank as a T-72. This type of model works differently. It compares the tank image to other images it has learned from and selects the closest match. In this case, the output is predictive because it is based on patterns and is essentially an educated guess. The output is also uninterpretable because there is no way to fully understand how the model decided to label the image as a T-72.
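The "closest match" idea can be sketched with a nearest-neighbor lookup. This is a deliberate simplification: production image classifiers typically use neural networks rather than literally comparing stored examples, and the reference features below are invented. What carries over is the behavior: the model always returns its best guess, even for an input that does not look much like anything it has seen.

```python
import math

# Hypothetical reference examples the model has "learned from":
# (two invented image features) -> label.
REFERENCE = [
    ((0.9, 0.8), "T-72"),
    ((0.8, 0.9), "T-72"),
    ((0.2, 0.3), "truck"),
    ((0.3, 0.2), "truck"),
]

def closest_match(features):
    """Return the label of the nearest reference example and the distance to it."""
    distance, label = min(
        (math.dist(features, reference), label) for reference, label in REFERENCE
    )
    return label, distance

clear_case = (0.85, 0.85)     # looks a lot like the T-72 examples
ambiguous_case = (0.55, 0.5)  # sits between the two groups

for name, features in [("clear", clear_case), ("ambiguous", ambiguous_case)]:
    label, distance = closest_match(features)
    print(f"{name}: {label} (distance to nearest example: {distance:.2f})")
```

Both inputs get a confident-looking label. Only the distance hints that the second answer is a much weaker guess, and many real systems do not surface even that much, which is why the user has to treat predictive output differently from rule-based output.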

These two types of outputs are fundamentally different and should influence the decision of the user accordingly. Most of these software solutions give you an immediate 70% solution which facilitates tempo but does not remove the need to verify assumptions. Takeaway 3: The output of software cannot be blindly trusted, but that does not mean it isn’t value added.

Taking the Initiative

It is easy to dismiss AI tools for military applications as someone else’s responsibility to figure out. However, unlike hardware, software can be made into a usable system employed by infantrymen relatively quickly and simply. AI can revolutionize parts of the infantry fight. Consider these example use cases of AI applied at the infantry battalion level.

Automatically generate a recommended ISR matrix for UAS assets given an enemy situation, friendly mission, and assets available that optimally covers likely enemy positions.

Identify battle positions that maximize fields of fire while remaining covered prior to conducting a leader’s recon of a planned defensive position.

Estimate the full composition and disposition of enemy forces given all data collected on enemy positions.

Red cell your friendly defensive situation by using models like ARES to better inform possible enemy COAs.

The common theme these examples share is that, if AI is used effectively, the time to process information into actionable intelligence can be dramatically reduced. Effective use of AI can increase the speed of decision-making, which means it can directly increase our operational tempo.

AI tools made specifically for reconnaissance and targeting are already being employed in Ukraine and Gaza, and they will be used in our next fight. To ensure we have the right tools, we need infantrymen like you to understand the capabilities and limitations of AI and be willing to test and scrutinize these tools as they arrive in the fleet.

Captain Kevin Benedict is an infantry officer and currently serving as an Operations Research Analyst at Manpower & Reserve Affairs. He can be reached at kevin.benedict@usmc.mil.
